I made two mistakes yesterday. First I installed DISQUS to replace this blog’s commenting system. It was a noble essay, but for several reasons it just didn’t work out. My apologies to the fans of DISQUS, something with obvious advantages, but it’s not something that works for me here. Probably a number of comments from yesterday are lost for good.

The other mistake is more embarrassing. I said over at John Woodman’s blog, and I think here also, that I had scanned my own birth certificate using Adobe Acrobat, and that the optimization created layers and that different areas of the certificate had their own layer. I did that experiment a year ago, and what I just wrote is true. However, recently I said that more than one of those layers was a 1-bit layer and I can’t back that up. There were multiple layers in obvious color and multiple layers in obvious not color, but I cannot say that two of them are 1-bit. I’m downloading a trial copy of Adobe Illustrator now to see for sure. Previously I had just used Acrobat to export the images, but I know that one doesn’t get them all. With Acrobat image export, one doesn’t get any 1-bit layers at all (the background is 8-bit grayscale). The point is not whether I was right or wrong, but whether I reported the experiment accurately, and it turns out that based on what I had to work with at the time, I could not have supported the conclusion that I remembered.

I’m not going to lie to you, but I make mistakes and it is always appropriate to ask me for sources or evidence to back up a claim.

I was delivered by Dr. Webb and on my birth certificate the doctor’s name is “We” on the background layer and “bb” on a foreground layer. That’s the same kind of broken words and random layer assignment that we see on the Obama form. This is one reason that I believed last year, and still believe today that the layers are on the Obama certificate are too crazy to be the result of intentional human action.

Garrett Papit, the latest darling of the birther image analysis faction, ignores the crazy aspects of why single letters get broken into different layers while focusing on certain logical units of information that do hold together, such as the Registrar’s stamp. This is the operation of confirmation bias: accepting things that seem to support the bias, and downplaying or ignoring things that go against it. The registrars stamp is a distinct area surrounded by a broad boarder of background and a software algorithm isolating it doesn’t seem all that strange, nor the smoking gun of human intervention.

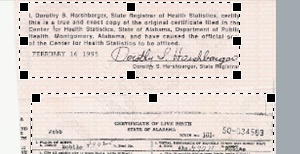

My birth certificate is in two parts. The top is the certification text with the signature of the state registrar when the certificate was printed for me and the seal. The bottom is the image of the original certificate. It’s all on a single sheet of security paper. When I scan this document using the HP Photosmart scanner utility, it thinks that I have two documents and it tries to save them as separate images. This isn’t PDF software, but it is an example of software somehow recognizing a logical division in a document, a registrar’s stamp even (!), without being asked to. I’ll show you a screen shot of what I mean:

By the way, my birth certificate is the only thing I’ve ever scanned that the software splits up this way. I had always considered it an annoyance until now.

My resume can be written to sound as impressive as that of Mr. Papit, and under scrutiny neither of us is an expert. But it’s not my word against his. It’s my sources against his. While I have, for example, found articles describing a halo effect around text in a compressed PDF file, he simply asserts that there aren’t any. I’m no more an expert than Papit is, and given his interest, he probably knows more than I do about PDF optimization. However, I don’t rely on my own expertise, and just about everything he says is on his own authority. So when I say that you should ask for sources and evidence, you should ask Papit too.

Doc, the only real mistake you made is the time that you thought you made a mistake.

Garrett Papit and the Parakeet have a spirited discussion:

http://naturalborncitizen.wordpress.com/2011/05/24/im-not-who-you-think-i-am/#comment-17896

Saw that. Strange dialog, but an Evangelican and Donofrio are on different planes.

saw the new comments format yesterday after coming back off hols……er…not good.

now much improved, cheers doc.

I’m glad you changed back. The new comment format was confusing. I might have grown used to it after some time, but I’m glad I don’t have to.

Re: “However, recently I said that more than one of those layers was a 1-bit layer and I can’t back that up.”

That proves conclusively that Doc was born in Kenya.

by the way, I love you spelling “Mistakes” as “Misteaks” in the title, comedy gold!

Huzzah! I got my Punchmaster credentials back!

it’s much easier to admit mistakes when you hadn’t been involved

in the typical insulting/ridiculing that seems to be the standard here.

The Dr.C. is even more civilized than the average, still he wrote

> you don’t have a clue what you are talking about

> I got multiple 1-bit-layers, so that alone tells me

> you’re utterly clueless and the claims of all your

> ‘experiments’ are worthless

but then he corrected it when he realized it and even started this thread,

which I think most other people here hadn’t done

However, Doc isn’t some of the artifacts that you witness just part of the security features? Doesn’t governments have introduce technology to make it very difficult to scan official documents such as birth certificates and paper currency? Just a thought.

That’s medium-rare humor.

Well done.

Sadly, by birther standards, since you have now (actually, again, as you are unfailingly honest about it) admitted to having made a mistake, everything that has ever been written on this board by you or anyone else is now discredited.

What a craven attitude you are championing!

If being made fun of for being wrong leads you to reflexively double-down on being wrong, then there is something very, very flawed and childish in your mindset. You only end up making things worse for yourself in the process.

Real men don’t have a problem admitting mistakes and don’t have to live their lives like a pouting yet fragile egg…

It is called integrity. Thanks, Doc.

Scanning is simply taking a picture, in a linear fashion, with a very shallow focal depth, but still optically. If you can take a photo of it with a camera, you can scan it, or at least part if it. Well, ok, drum scanners require flatness. Other purpose-built scanning techs are also limited, i.e. slide scanners are limited to a certain size of transparencies.

But I know what you mean. Yes, there are ‘security features in money:

http://www.secretservice.gov/know_your_money.shtml

And special patterns on paper. These make reproduction difficult, not scanning. Some of them generally will not scan at all (watermarks), or not convincingly (color-shifting ink won’t shift in a still image, nor will metallics by metallic).

All that said, patterns and color variations can give some compression schemes fits. For instance, the can cause “halo’ing” from MRC’s masking to be more prominent. They can cause false positives for edge detection / character recognition algorithms.

So, yes, some security features will not scan. But no, they don’t necessarily cause artifacts. A simple, scan to an uncompressed rastered image would have no artifacts, beyond normal pixelization, and definitely not in the birther sense. If scanned in a way that inherently creates ‘artifacts’, security features can amplify their frequency and magnitude.

Depends. I think the “VOID” all over Mittens’ BC is caused by a security feature that makes these letters appear under conditions such as basic scanning.

Papit makes the claim based on his selection of software and parameter settings but fails to understand that MRC is notorious for making halo-like effects. You can reduce these effects by 1) data-filling the background 2) smoothing the text edges. In Papit’s examples the background is clearly data-filled unlike the background in the Obama PDF which leaves is blank. DJVU has a setting for example which allows you to fill or leave blank such gaps, if not left blank, the software tries to fill the gap using external hints.

Understanding MRC and the many variations as to how the segmentation, separation and optimizations take place would help Papit understand why his quest is doomed. He is trying to prove a negative which requires him to assert that MRC does under no circumstance create a halo, which is something we know to be false. But without such a statement, proving a negative becomes quite problematic as the parameter space is quite large and Papit has only a fraction of the software that exists.

Furthermore I believe that some hints suggest that OCR was used and that the text layer was later removed, as is typical in for instance law firm workflows. No reason to provide your opponent with searchable scans…

I have been discussing this at obamabirthbook.com with some response from Papit. But I guess he still digesting the bad news.

Hard to tell on that one. I still think , based on the crazy angle, etc., that that was photocopied or fax’d under duress LOL

But yes, scanning is similar to photocopying, any ‘invisible’ writing that shows up under harsh light could be highlighted by scanning. It would be interesting to see a color scan. I haven’t had the pleasure of handling any documents with that particular feature. For instance, this variation:

http://www.highsecuritypaper.com/features–hiddenmessage.html

… appears to be a buried color pattern of red microdots in a sea of blue. on a color scan it appears normal, but on a photocopy, the light blue falls out and suibtle reds go to black.

This one, looks far more interesting:

http://www.adlertech.com/Sentinel.htm

Of all the things about the “It was completely computer-generated!” crowd, the argument that a forger, with access to other original and real documents, plus signatures of the people involved, would choose to put random letters from the middle of a signature into a different layer – as if they had a signature, and said “Oh wait, that ‘a’ doesn’t look right”, and rather than get another example of the signature, cut out just that “a” and pasted in a new one, is to me the funniest.

They attribute things that no human being would do when creating a document because they are overly and ridiculously complex, as proof that a human being had to have done them, rather than attributing them to the new logical explanation of computer algorithms sometimes produce odd and/or boneheaded results, and can’t necessarily tell that all the letters in a signature are part of the same object.

Furthermore, the few times a forger, had there been one, WOULD have logically used photoshop – for the date in the SS registration, to make sure there were 4 digits, they attribute to an even more ridiculous “Cut a stamp in half and turned it upside down” explanation.

Papit’s argument has been that when you say that, you’re “reading the forger’s mind” — how do you know a human being wouldn’t do that? (Otherwise known as the “Criswell defense“)

Of course, this is exactly what he’s trying to get away with as well. He’s assuming that there is no software on the planet that would automatically create what we see in the PDF, even though there is no way he can prove that. Since there is no such software (source: “because I say so”), the document had to have been tampered with.

When it’s pointed out to him that there is no way to prove this negative, he then says all we have to do is give one concrete example of software that behaves this way.

…which would be the equivalent of you asking him to give one concrete example of a forger behaving this way, but I don’t think he’d like that request very much.

He then skates away from what this all might possibly mean. He specifically denies that he is saying Obama was born in Kenya, or any other consequences of the putative tampering; he just says, “Hey. Tampered. You can draw your own conclusions as to why.”

Which is not hard to do, but would then be immediately questioned. It’s endless tailchasing.

Most posters here have no problem admitting when they are wrong. Dr. Conspiracy started this thread because it is his blog.

Speaking of “Misteaks”, anyone else notice how WND is saying nothing about their favorite sherrif’s trail?

Happy trials to you, ’til we meet again.

DOH! XD

The typo monster strikes again.

They are so confident of the outcome, coverage is not merited. 😉

Come to think of it, I don’t think they have ever mentioned it … have they? not even in the vein of “Obama persecuting Arpaio for daring to seek the truth”?

huh and Papit is anti-gay as well, that’s a real shocker.

There is no bias in my report. My focus was on optimization and I point out the many differences between what one would expect from optimization and what you see on the Obama PDF file.

How many applications do you have to test, which return identical results, before it is a conclusive sampling on which to base a scientific claim?

And for the record, the other side has claimed that the WH PDF is the result of optimization yet they don’t provide one example with similar properties. Yet, I perform hundreds of tests over a 6 month span and you find their evidence more compelling. Quite telling in my book.

It is what it is. You guys will believe what you will believe. The sad thing is you don’t even know what I believe. I’m not convinced Obama wasn’t born in HI. There is evidence he was and also circumstantial evidence that he wasn’t. My only claim is that the PDF shows signs of tampering.

And it isn’t just the multiple 1-bit layers. The form lines should be evenly distributed throughout the text layers and the color properties of the text are inconsistent with optimization. Not to mention the file was created by Mac Preview…which isn’t capable of creating layers itself. Does it prove the PDF is a forgery? That is up to each person to decide. But it is still compelling evidence that should be examined.

Garrett: It isn’t the number, but the quality. 600 or 6,000 or 60,000 invalid experiments don’t produce a valid conclusion. You simply don’t know what steps were followed in generating the original pdf, nor what equipment was used and what settings. You are trying to make an apple pie starting with oranges and saying that you tried 600 recipes, but none of them worked, so apple pies don’t exist.

Let me add that in my lab career, I have often needed to reproduce published work. The first thing I do is try to start with EXACTLY the same materials that the authors used and follow their protocols to a tee. If I can’t obtain them, I keep my mouth shut. I don’t substitute rat liver for monkey brains and then yell “FRAUD!” if I get a different result.

Mr. Pappit, in an effort to apperar to bring science to bear, says there is circumstantial evidence the President was not born in Hawaii.

Would you care to list the circumstantial evidence?

Here is an elementary question:

Is it possible to have multi-layered MRC that produces seven or eight masks (each containing text of different grayscale values), a background layer but no foreground layer?

There is a data point for this: an optimized document, posted on the White House web site.

You are arguing that because you, Garrett Papit, cannot determine how this optimized document was created, and because no one else has explained how this optimized document is created, “the evidence suggests that this file has been tampered with.”

To bolster this conclusion, you have made claims that cannot be supported, most specifically that no optimization method in existence can use multiple 1-bit mask images. You don’t “suggest” this; you have categorically stated that “optimization creates only one single 1-bit text layer.”

Even more broadly, you have said “Recall that an optimized file has only 1 transparent text layer,” which is incorrect no matter what your stand on multiple 8-bit vs 1-bit layers is.

You have also categorically stated that optimization cannot create halos, despite canonical sources to the contrary.

You have also fudged the test results you have displayed, by inexplicably switching from safety paper to a solid color background (figures 47 through 49).

You have said, “There would be no logical need to resave the PDF within Preview” although logical reasons exist, while at the same time arm-waving away any attempts by anyone else to ask why a forger would have any logical need to, for example, create a date stamp layer with missing characters, added squiggles, and part of the text from the form itself.

I haven’t gone into this in nearly the detail of others here, but those are the points that stand out to me.

can’t we identify the algorithm by the outcome ?

There are much fewer meaningful algorithms than applications

(+settings…)

“There is no bias in my report.”

Funniest line of b.s. I’ve read today so far.

I wonder how much Farah and Corsi are paying some of these birthers?

Their ridiculous and easily debunked line of crap reeks of desperate political smears.

Everyone should be suspicious of anyone claiming to have circumstantial evidence who can’t produce any credible evidence whatsoever.

I’ll stick with the credibility and legal authority of the state of Hawaii over bumbling, wannabe experts, birther bigots and sad clowns with a crap stained political agenda.

From the outcome, the algorithm is Mixed Raster Content (MRC). It is axiomatic that it is MRC, because it is an optimized document composed of a mix of rasters. It fits all the descriptions of MRC in various technical papers.

But this does not satisfy Mr. Papit; he would like to know (and sure, I’m curious, too) what created this MRC output.

Actually, Mr. Papit claims that it cannot be MRC, because the definition of MRC precludes multiple transparent layers. This is not true.

He also claims that MRC does not produce halos. This is also not true.

He further claims that because he has made hundreds of tests, he has exhausted any possibility of a piece of software having created this output. (This is a logical fallacy.) Thus, “the evidence suggests that this file has been tampered with.”

I don’t want to put words in his mouth, but my reading of “this file has been tampered with” means “a human being rather than an automated algorithm was involved at some point.” He may feel free to correct me if I am misrepresenting his claim.

This would mean that, as one part of the process, a human being would have created the halos. He does not of course make any conjecture how the human being would do this. A pixel editor? That seems quite labor intensive. But, you know, “we can’t read their minds.”

But the questions hanging out there remain:

* Why would someone do this?

* If they did do this, given that the State of Hawaii verifies all the data that is displayed, how does this matter in the slightest?

But there is one such example right on the White House website, which is an important fact BECAUSE the State of Hawaii verifies its information, and even provides a link to that exact image. Thus, talking about a forged PDF is *ridiculous* from the very beginning.

If you want to waste your time more productively, I would suggest you focus on how and why someone planted birth records at Hawaii’s Dept. of Health, and how and why someone faked the birth notices in the newspapers. Motive, means, and opportunity.

Do not worry. The day you actually come up with compelling evidence is indeed the day you will become famous for uncovering such deception. You won’t have to wonder whether or not it is compelling evidence. But that day is never going to happen if you just play around with meaningless reports about how you think PDFs should look.

You can perform hundreds and hundreds of tests to tell me this here is not a rock, because you say it should be smooth and homogenized by years and years and years of being in the ocean. Never mind that it comes from a glacier, smashed up against and with other layers of rock. After your arguments are finished, I still have a rock in my hand, and the President is still our president.

Perhaps there is circumstantial evidence he was born somewhere else (although while Corsi et al claim so often they don’t ever actually produce this evidence). But said evidence is blasted to smithereens by ACTUAL evidence, such as the fact that the state of his birth says he was born there and verifying the certificate posted online as valid.

No analysis you do now, or ever, can get past the state’s verification of the certificate, at least not at the state level. The Full Faith and Credit clause, to first order, means as soon as the state says “Yep, it’s good” the matter is closed with respect to other states asking about it (which is probably one reason why the CCP never bothered to ask).

I address that philosophical question in my article: Counting Crows.

Even serious scientists often go awry sometimes due to unrecognized biases or omissions in sampling. Scientists review each others’ work and sometimes find errors; however, I have not seen a list of all of the software you sampled. Did I miss it, or have you not published it? If you haven’t published it, then you have not made a scientific claim. You’re just asking us to trust you.

After all, the literature has shown that you totally missed the mark claiming that halos aren’t created by compression. That alone proves your sampling is defective. Was this because you failed to test sufficient software to find something that we know exists? I think this is a serious challenge to your methodology and ought to bother the heck out of any honest researcher.

Dr. C. I provided a partial list in my document. There is a more comprehensive version, about 50 pages, that I think will be published in the near future that lists even more. However, I only listed the applications that actually created layers at all. There were numerous optimization and compression apps that didn’t even get that far.

BTW, I do appreciate you admitting that you can’t back up the multiple 1-bit layers assertion.

As far as the haloing, from my research I have not seen MRC create even the faintest halo. I would be very surprised to see it to the degree we see it on the WH PDF file even if MRC can create halos. Can you provide a link to the documentation of this fact? I missed many of the technical points that may have been made on Woodman’s blog due to the cross-talk and lost interest after about 1000 unrelated insults.

However, my opinion is still that MRC was never run on this document anyway. The MRC format, by definition, contains a layer to retain the color information for the text that was converted to black and stored on the single 1-bit layer. Someone on Woodman’s blog apparently claimed that this isn’t always the case, but I would like to analyze his claim as well.

Let’s put it this way. Even if there is some magical application out there that will provide all of the oddities on Obama’s BC, and I’m still extremely skeptical, don’t you find it odd that such an obscure method was used on a document that was destined to be held up to extreme scrutiny? Put another way, even if someone can prove that an innocent process created these issues…I would contend it was in no way innocent.

At best, we have someone in the WH who thought it would be funny to find the most destructive and suspicious method of creating this digital file possible. At worst, we have someone who was trying to obscure something else. That is my opinion.

I can’t make any claims about the underlying document as nobody has been allowed to examine it. If they were, it would have been court certified forensic document examiners..and not myself. Coincidentally, I suppose (tongue in cheek), no physical document has been presented to do such.

Doc-As I stated on the other thread, I don’t think the number of attempts is really a relevant factor. The number of possible combinations of software, hardware, settings and starting materials (types of original documents) is perhaps not infinite, but is doubtless a number larger than anyone could get through in a lifetime.

On Woodman’s site, I did encourage Papit to do what real scientists do, write his work up and submit it to a peer reviewed journal. I am not in the field, but surely there are journals in computer forensics. He needs his work reviewed by unbiased experts, not a car salesman.

http://image.unb.br/queiroz/papers/icip09zaghetto.pdf

His claim that MRC only produces one layer of text is also mistaken.

“…starting from a basic background plane, one can pour onto it a plurality of

foreground and mask pairs as in Fig. 15.1(c). Actually, the MRC imaging model allows

for one, two, three or more layers.”

And you can have mask layers without any foreground color layer.

“Less than 3 layers can be used by using default colors for Mask and/or foreground layers.”

http://signal.ece.utexas.edu/~queiroz/papers/doccompression.pdf

Assuming that were true, so what? This a contact sport-politics- and anything you do within the law to discombobulate your political opponents is permissible. And no scanner setting is illegal, so far as I know. The Rs have been doing that for decades; good to see the Ds fight back, if that is what they did.

By the way, I don’t think that is what happened. I think they used the typical settings they use on most documents and this one behaved oddly because it is not a letter or report on plain paper, but a document on security paper. You have not shown anything that negates that scenario.

Garrett,

Is it possible to have multi-layered MRC that produces seven or eight masks (each containing text of different grayscale values), a background layer but no foreground layer?

I don’t question that there are endless combinations when considering things like ink differences, paper differences, hardware and software differences…but the question is whether those variations change the fundamental properties of the file or just the surface elements built on top of those fundamental properties.

Also, I did use multiple printers and scanners for my testing..ranging from bottom of the line Kodak Easy Share to $4000 units. In terms of the paper, we know it was a piece of safety paper from HI…and that paper has standards. As far as hardware, I tested on both Macintosh and PC…I doubt the amount of memory, storage or CPU power is going to affect the logic of a software application. 🙂 I also used a control document that had printed form data and a manual date stamp using an ink pad to test if ink differences were treated differently. Finally, I did test every possible combination of settings within the applications used….and it was a mind-numbing process I assure you. Testing Acrobat 6, 7, 8, 9 and X alone took a couple months as it contains one of the most advanced routines with the most modifiable settings.

The take away was that, in every case, the fundamental properties were the same. There was only a single 1-bit image mask layer, the form lines were evenly distributed throughout the layers that contained text, the color properties differed from the WH PDF (the difference varied based on whether app utilized MRC or adaptive [ie. Acrobat]) and no appreciable white halo was created.

Again, if I could have replicated the fundamental properties…even without replicating the exact details…I would have turned that info over to the CCP.

Just from my extremely limited knowledge of PDF software that does MRC, there were a couple of glaring omissions from your list that will perhaps be on your longer list. I don’t recognize everything on your list, but from what I do recognize, it looks like you just used packages that provide a free demo version download.

However, the thing that bothered me most is that you didn’t include any of the hardware solutions that are ubiquitous in businesses (my company with 20 people had one) like the Xerox WorkCentre series that would be someone’s first guess on how the White House document was scanned.When I was working and I needed a document in PFD form, I would just stuff it in the machine and say “Scan to PDF.”

http://www.office.xerox.com/latest/W5BBR-01.PDF

I found one report on the Internet of someone who scanned a document with an unspecified model of the WorkCenntre and got layers.

http://www.chicagomag.com/Chicago-Magazine/The-312/March-2012/The-Obama-Birth-Certificate-and-the-Layers-Conspiracy/

I would ask that commenters here focus on reasoned discussion of technical points. Otherwise anything of note might get lost in the noise.

LW, is the source code for mixed raster conten compression public ?

And the source code for displaying pictures from .pdfs

——————————–

Garrett Papit, I think the chance for “unrelated insults” is bigger here than

on Woodman’s blog … it depends on the people who happen to post, of course.

Ignore it.

Yes, it’s odd that the WH used software that we can’t figure out.

And that they won’t comment about it.

But I do see no signs of foreign birth here, it makes no sense.

————————

gorefan, of course it’s possible.

Just write it. But would it be popular ?

or would it make sense ?

I currently know of at least four images of the document:

* What I’ve seen called the “AP” image, actually the paper copy handed out at the presser.

* The PDF

* Savannah Guthrie photo 1

* Savannah Guthrie photo 2

The last is poor enough quality to be of little use (unless you’re trying to convince people that the master forgers don’t know how to spell “Hawaii”), but the “AP” image has details that the PDF does not.

Key points:

* All of these images contain identical data

* The State of Hawaii confirms that this identical data matches their records.

* The AP image cannot have been generated from the PDF.

I would think that any analysis of the PDF has to take the AP image into account. And what the AP image says is: analyzing the PDF is an exercise in navel gazing.

I have a question that I haven’t seen discussed. Everyone assumes that the pdf was made at the White House from the paper document provided by Hawaii. But couldn’t Hawaii have made the pdf? They had to copy the original and could have made the pdf at the same time and provided it to the attorney on a thumb drive or sent it by Email. I don’t know of anything that rules this out.

Why would companies provide their competition with the source code for their proprietary software?

As I said elsewhere, I see nothing to indicate that you tested any of the commonly-used scanning devices that create PDF files directly. In a business environment (in my experience) this is the most common way of scanning documents.

When I heard the Cold Case Posse press conference they said software on “Mac and PC” and I immediately thought, what about Xerox?

There is not one “mixed raster content” for which there would be source code. It as a concept with many optional aspects to it, and myriad implementations. That’s why saying “no, this just couldn’t be MRC” is a reach.

I don’t know if anyone has a free software MRC implementation.

I know of at least one free software PDF reader off the top of my head, Evince. There are probably others.

Looking at the halo in that example, it is a pinstripe in terms of thickness around the letter and is perfectly uniform. I wouldn’t even call it a halo so much as an outline. I think this is a terminology issue rather than a technical one. By contrast, the halo on the WH PDF varies in distance from the letter border and is sometimes as wide as the letter itself…even obscuring the background in the gaps between letters and within letters.

To me, the two aren’t comparable at all. The only way I’ve seen a halo generated to the degree we see on the WH PDF is using an unsharp mask in PS or another graphic editing app.

Nothing rules it out, but it would be strange that they would not have mentioned doing so when they did describe how they made the paper copies.

(The datestamp on the Preview output is from shortly after the presser, but that’s not incompatible with your scenario.)

They certainly didn’t use typical settings available in any of the better known applications…I’ve tested those. Also, I downloaded dozens of other PDF’s from the WH web server…using a PDF search engine…and the BC PDF is the only one with layers at all. I guess they just decided it would be fun to use different settings on this one? 🙂

That isn’t completely accurate. There is one mixed raster content model on which applications are designed. Again, I’ve yet to see one that produces more than a single 1-bit layer. Add to that fact that adaptive compression, utilized by Acrobat, only creates 1 1-bit layer as well and you begin to see a pattern. Obviously the people who design these applications think that is the most efficient means of doing it.

Again, all one has to do is find the application or hardware device, Xerox as Dr. C suggests, that produces it and you have solid evidence.

I think HI has stated they provided paper copies and not digital ones.

Sometimes when there’s smoke, there’s a bunch of birthers giggling over their smoke machine.

That is no different than a scan of a document. Those are still digital images of the document. And each of the versions actually differ from the other in minor ways.

For instance, the Guthrie photo doesn’t show the smudges on the right border of the document which are visible when you release the clipping mask on the WH PDF file. If it was a scan of that document…you would expect to see the same smudges. Is that why the clipping mask is there…to hide the smudges? Who knows.

And before you say it was simply a scan of the 2nd certified copy, the stamps are in identical placement despite the fact that they are put on manually by a human. The odds of that are low.

In fact, I find it strange that they even made a point of mentioning that 2 certified copies were provided. Especially given the fact that he didn’t hold either or provide an actual certified copy to anyone but Guthrie. But I digress, that is just speculation.

Smoke machines are pretty cool. I am partial to dry ice myself. Oh wait, this isn’t relevant at all. 😉

I know they say they provided paper copies. Are you not on board with the CCP “there was no paper copy” claim, then?

What I’m not aware of is any place where they said they did not make digital copies. I strongly doubt that they did make any, though.

They allowed a press room full of reporters to examine it as much as they wanted to. And not ONE SINGLE REPORTER (including the one from WND) claimed it was a forgery. In fact, the ONLY claims of forgery come from people who have NEVER EXAMINED the document. So, what kind of control tests did you do to show that you could tell a forgery from a real document using only a .PDF?

Kudos for the humor. 😀

That is incorrect. I have shown you a reference that shows that MRC encompasses technology where there may be fewer layers.

I was wondering where you went into hiding after people on Woodman’s blog continued to destroy your arguments.

PS: There is also the HP patent with two layers. So many assertions that are just plain foolish. All you have to offer is that in your limited experiments you have failed to find evidence of a halo and multiple 1-bit layers. That of course is what is know as an argument from ignorance.

The failure to consider OCR further undermines your efforts. But that’s a whole new thread by itself.

http://www.youtube.com/watch?feature=player_embedded&v=XcWQw2AAIho

This guy produced multiple layers with a text document. The layers act suspiciously like the ones on the whitehoue pdf.

But you say that multiple layers are not possible?

He says that multiple 1-bit layers are impossible.

Graphic editing apps or not the only ones that use “unsharp mask” settings. As Dr. C pointed out there are hardware inclusive apps that have settings such as “unsharp mask.”

Garrett, since you claim to be the expert, help me out here.

Just opening the PDF in Acrobat Reader and zooming in, I see things like the “151” in the certificate number is jet black, but when I look at the registrar’s stamp, it’s distinctly lighter gray.

If both those are, as you say, 1-bit layers, then how come they are different colors? I suppose one could have two 1-bit layers with different color palettes, but wouldn’t that be color separation, just optimized?

Were the source documents for the other pdfs printed on security paper? I bet they were all printed on plain paper. This was not a typical doument. They might have tried the typical settings and found it created a poorly legible pdf. Surely you don’t think enhancing legibility is a crime?

Not tech saavy but found this article interesting regarding the scanning size of documents from Preview. They mention options of saving as a jpeg then resaving as pdf in Preview to reduce the size. The file from the WH is “viewable” small, but maybe it wasn’t when first scanned. Just a thought

http://reviews.cnet.com/8301-13727_7-20068107-263.html

Hawaii was copying the original to make the paper documents so why not create a pdf at the same time, since I’m sure the White House told them they were going to post it on the web site. It doesn’t really matter, but I think we should not assume either way.

GPapit:

Lest you think I am a run-of-the-mill Obot, I am not. I am an anti-birther, now. Like you, I strongly suspect the LFBC image was either purposely published in the format it was to keep the Birther issue alive, or done through mistake by somebody trying to enhance the readability. If it is the first of those options, then why play Obama’s game and go around hollering “Forgery!!!”, which is what most Birthers are hollering, not the tamer “Manipulated!!!”

If it is the second option, a simple mistake, which occurred, then again, why run around in circles hollering anything at all? It was just a dumb mistake.

Do you notice how both options have the same “best response,” that is, to just ignore it, and accept the image as containing correct information. Because with Hawaii putting up a link on their state website, and saying he was born there, then there isn’t any way this is going to end up the way Birthers wish.

Those who think Obama is up to something have had 4 years to find some erroneous piece of information on the original COLB, or later LFBC, and to date nothing has been substantially proven to be false, or altered in any material way.

Another point. When someone advocates on behalf of the two citizen parent stuff, that isn’t just wrong. It is delusionally wrong. Case after case slam dunks that nonsense straight into a garbage can.

A bad result from advocating something that is obviously and delusionally wrong, is that it makes people doubt everything else that person says. For example, if Romney was a Moon-Landing Denier, do you think he would be the GOP candidate right now??? And for a real actual example, take Karl Denninger.

I read his blog faithfully, and still do, but ever since he came out as a two citizen parent Birther, in spite of me providing him proof he was wrong, I hold my nose now when I read his blog. I can’t take what he says as true as easily as I once could because he has globbed onto the Vattel nonsense. I mean, if a person can’t read the Reader’s Digest Condensed Version of Wong Kim Ark, which is the Ankeny decision, and figure it out, or the court decisions that followed, then how much can I trust his analysis of financial matters??? Strictly speaking, this isn’t a logical thing, because one grossly dumb error does mean all other decisions are grossly dumb, but I am a human being, and his belief in the Vattel stuff sure affects my opinion of him. Not to mention that he DQ’d me for telling him the truth.

All of this to say to you, if you want more respect for your opinion on pixels and layers, rid yourself of the grossly dumb two citizen-parent stuff. Because it’s a reputation killer. And because it’s the right and smart thing to do.

Squeeky Fromm

Girl Reporter

From the article I linked above, basically I am reading that using Preview and setting it to scan directly to PDF will create a very large file. The WH file is a pdf of about 335KB, hardly anything large.

I don’t have a MAC or really the care to try this, but maybe the initial scanned pdf was too large to upload or even too large for viewing. So it may have been saved as a jpg to compress and then later resaved as a pdf using preview again. This, again, according to the article allows for the compression of the file.

Now, does someone or can someone comment on whether having a large pdf go to a smaller jpg and then to smaller pdf will cause additional layers?

Bingo… Funny how Papit needs to be told…

for the programmer it’s no big deal to go from one layer to several.

So straightforward that I’d assume most software is trying this.

But apparently only in very few cases this gives an improvement.

That is totally erroneous The Mixed Raster Content in its basic concept is a three layer compression solution where foreground and background are encoded in lossy, low DPI and the text is coded as a bitmap.

However, these can trivially be expanded or contracted to include more or fewer layers. For instance, it is much more efficient to encode in monochrome bitmaps with a stroke color set, just as we observe in the WH PDF. This significantly reduces the file size at limited or no expense. This is done by keeping track of regions of texts in different colors and rather than using the expensive jpg compression of a foreground, they are grouped together and then printed as monochrome with a color stroke set.

If there is no background layer then MRC will not have a background layer.

Your overly simplistic understanding of MRC is endearing but in the end it fails to recognize that it is a ‘recipe’ with many flavors and variations.

I showed you a paper in which MRC is described as being able to have more or fewer than the three layers. I have shown you a patent which discloses a two layer separation.

So let me provide you with a logical explanation and observe you, by jumping to a conclusion, may have failed to apply the relevant test.

I predict that the document was scanned and the settings were to optimize for OCR. This requires accurate separation of the text from the background and the removal of horizontal and vertical lines, all common practices in OCR software.

So now we have software which detects regions in which data appear to be similar in color and in font and they are captured in the large bitmap layer. But the software also recognizes areas with stamps which more poorly match the objects found so far.

Let me explain JBIG2 compression to you. It separates the text into letter objects and if it fails to find a similar object in the translation table, it will add one or otherwise it will reuse the existing object, leading to characters looking exactly the same. Accurate text segmentation is incredibly important when trying to do OCR and the data filling we see in your examples where there is no white hole where the text used to be, is not necessary. But this will tend to create halos. But remember this was about OCR.

So where is the OCR then? You yourself have noticed that there is no evidence to be found of the hidden text layer. But you should be aware that workflow used in legal environments are setup to remove metadata as well as the hidden OCR text, as one does not want to make things too simple for the opponent. Furthermore, by printing it at 72 DPI using preview, it will be much harder to do accurate OCR.

So now we have a workflow which explains the segmentation into multiple one-bit layers, why many characters look the same even though on the higher resolution xerox they clearly are different and why there are halos.

But guess what, Palit ‘eliminated’ OCR as there was no hidden text. So all 600+ experiments may very well have been totally missing the point. That’s, as someone called it, stating that one has searched all of the east and west coast of the US and found no evidence of polar bears, to conclude that polar bears do not exist.

Sorry Garrett, but your approach would be so impeachable in court that you may be grateful that your testimony would likely not be admissible.

So your reason to ignore the fact that Guthrie did in fact take a photograph and showed a real document, including the seal is because of some ‘minor’ issues and yet you indict based on even smaller such issues..

As I said, you may count yourself lucky that your testimony would never be allowed in court as you would be so trivial to impeach.

Confirmation bias?

And of course, the documents will show minor differences but they all show the same data.

Why you focused on a highly compressed document is beyond me… Why you confuse indicators of compression and workflow algorithms as ‘forgery’ although one has a hard time explaining why a forger would distribute the letters in such a haphazard fashion.

Sorry my friend but you appear to be quick to ignore evidence that fails to follow your expectations.

As to the Guthrie smudges. Could you be more specific. Ah I see, yes there are some smudges where there are none. Could be a smudge on the paper or or the scanner.

Is that all?

So we agree that the parts that matter all are the same? The data surely looks remarkably similar.

Which of course must be evidence of a forgery…

and of course, as you said, there were two such documents.

There is that argument from ignorance again.

Sigh… We have shown how halos are an inevitable side effect of MRC compression and all you have to offer: “To me, the two aren’t comparable at all” this after stating that MRC does not create halos.

So just to be clear, since I haven’t dived down into this particular bit of arcana before: we’re talking about the two little blops to the right of box 17b? The ones that are either schmutz on the scanner glass or definitive proof that master forgers were at work?

You seem to be proving my point: the paper document shown at the presser cannot have been generated from the PDF.

Ah but the fact that the rest of the document fully agrees is to be ignored in favor of a few smudges. Yes, these are the work of a master forger.

Again, I’d love to be there during the cross…

According to Mark Gillar those smudges are in fact vital statistics codes! And were made with the same pencil as the little numbers that show up in the various boxes.

Only if the smudges are on the document and not on the scanner glass.

Hmmm. Your comment got me thinking…

So in addition to MRC there is the use of unsharp mask…

Check out this image

http://s1.www.scan2docx.com/img/screenshots/filters_zoom.png

Beautiful halos… Would you not agree… Improving legibility… Ahhhh… Wow…

Well, sure. Mark also claims that a 4×4 grouping of pixels, closed at the top, can be determined to be a “u” and not an “a” .

Hmmm

OK, I may have done Mark Gillar a disservice. A few minutes ago I just happened to notice that on my bookshelf here at work, I have a document called

Sekrit 1961 Hawaiian Vital Satistics Birf Codes! Don’t Tell Anyone!

It’s odd that I never noticed this before. I’d love to show this to all of you, but I’m saving that for just the right special time.

Anyhoo, I was looking at Table 42, “Vital Satistic Codes What Should be Used in the Right Margin of Birf Certificates” — and there they were! Both symbols!

Ɉ – SENT BY TELEGRAM – MAKE UP DOCTOR’S NAME AND TIME OF BIRTH

ů – TOTALLY BORN IN KENYA

Nope.

Well… We know that MRC creates halos but so does an unsharpen filter. Funny how they appear to be part of some OCR workflow software.

The plot thickens but thanks Garrett for providing me with the missing link. Remember when I mentioned ‘to improve legibility’? The unsharp mask led me to some interesting discoveries. But it was Garrett’s comment that triggered my interest.

So what if the document was run through an unsharp filter to improve legibility and it was applied a bit aggressively?

If Garrett thinks white, uneven halos can be produced with unsharp mask, let’s see him do it.

Let’s seen a written workflow of steps taken, and results produced.

It would help explain a lot and not really help Garrett’s case.

Unfortunately, it’s one of those proving a negative things. I know it’s not possible using that filter alone, but that is what a few birther’spurts have claimed. Breezily tossing it off….push this button and poof halos.

I know what the filter does and does not do. Combined with other steps, you could get close. The ultimate reason that unsharp mask can’t replicate those layers (by itself) is that is sharpens. Those halos are blurred. If applied to the whole image, it would have sharpened the background. If the text was selected, and the selection sharpened, it would hypersaturate any color selected, not blow it out to white. If applied to the bitmap overlays, it would do nothing.

There are ways to produce those halos, but I’m not volunteering any! 😀

So, until then, I suggest the birher’spurts take the scientific, “Don’t take our word for it, reproduce our work for yourselves” approach. Show us your results, and list the steps. Serve it up for public, crowdsourced, independent verification.

[muzak]

Check out a rough draft here

http://nativeborncitizen.files.wordpress.com/2012/08/hello-world.png?w=661

Not necessarily because the unsharp filter is used by several scanning tools to sharpen the text. So assume that an overly enthusiastic scanner wanted to increase legibility and applied the unsharp filter?

In this case unsharp would be in favor of both forgery and workflow. But I have my ideas.. Again Garrett is running against the problem that a perfectly reasonable workflow exists.

What did you star with? And why cyan? 😛

Yes, but they don’t produce such prominent halos.

Unsharp applied to eagerly will introduce noise., even in greyscale.

GIMP and a lot of ignorance… I am now repeating with a better cut and paste of the original background and will add some text and then unsharpen..

It’s not as impressive as I had initially imagined 🙂

Amateurs… Oops, that would be me…

And it affects the whole picture. Not just the text so the background distorts. And applying it to a text layer does not generate the effect as it does not affect the background.. I wonder what happens when a minor halo is run through MRC…

Oh yes, noise is quite a nasty side effect too.

Having the MRC compression certainly makes things a bit more complex.

I would add what to me is the important point about such things, that one can always get the time from a clock that actually works and not bother trying to figure out when the broken clock happens to be telling the right time (for which determination one would need a working clock anyway). A birther destroys all their relevance on other matters, because there are other people in the world, some who are actually reliable.

Yeah, compression is a one way street. Can’t go backwards, have to recreate. Try playing with this, a half-arsed recreation:

https://picasaweb.google.com/lh/photo/wiVGDMbkHl4jxEFSMXsfQtMTjNZETYmyPJy0liipFm0?feat=directlink

Ooooh it has a pretty seal 🙂

Too much JPotter…

But high resolution.. Now that’s the document I have been looking for. At least we can make some informed experiments happen here.

But also too perfect to be real. It’s a cartoon. I have wondered how birthers would react to it.

Of course it’s a forgery, it says Obama was born in Hawaii, hahaha … but would they correctly explain why it’s obviously a forgery? Or, a recreation, a pastiche. The information is unchanged, the seal is simulated, the presentation is …. ahhh…. customized. 😛

has anyone looked at the pdf-manuals, how these files are structured ,

encoded ? It should be possible to detect, which algorithm

and method were used.

The 8 1-bit-text-layers, which occupy ~ 80000 bytes have twice the resolution as the 8-bit background layer,which occupies 299555 bytes

pdf-1.3 was used, which is an old variant

http://www.digitalpreservation.gov/formats/fdd/fdd000316.shtml

http://wwwimages.adobe.com/www.adobe.com/content/dam/Adobe/en/devnet/pdf/pdfs/pdf_reference_archives/PDFReference13.pdf

~700 pages 🙁

page 13 , 2.2.2 compression

page 36 , filters

flate decode , note 9 in appendix H

flate decode , 3.3.3 , page 43

no hits for “mixed raster”

——————–

the 8 1bit layers have “FlateDecode” ,

the 8 bit layer has “DCTDecode”

What is the copyright for that adobe manual?

1985-2000 that’s a very long time ago in software development.

Here is the 2006 adobe reference manual:

http://www.adobe.com/content/dam/Adobe/en/devnet/acrobat/pdfs/pdf_reference_1-7.pdf

It is 31 mb file versus the 2000 which is a 5 mb file. I guess alot changed in 6 years.

pdf-1.3 was used in the lfbc, to which I provided the correct link

Mixed Raster Content is a model for creating compressed representations of documents developed by Xerox in the late ’90s. It is not tied to any specific file type No reason to expect it to be mentioned in a PDF file spec from Adobe.

Not unusual at all that the WH LFBC was saved as 1.3. saving to an older file format ensures greatest compatibility. It’s standard practice. Very likely the default of whatever hardware created the file. Users running older hardware / software will be able to open it.

Yep. That’s what the Quartz libraries in OS X generate, based on a venerable bit of freeware known as “Derek’s PDF”.

(You can find that string embedded in any Quartz-produced PDF file, if you know how. A hot birfer theory at one time was that the forger must have been named “Derek”… 😆 )

The PDF spec didn’t have to be written to be aware of MRC. MRC was designed so that it could utilize existing file formats that can encapsulate multiple images, such as PDF and TIFF.

GEEJUSSS IN A HANDBASKET!

WHO GIVES A FLYING FORNICATION?

THE INFORMATION ON THE DOCUMENT IS WHAT IS IMPORTANT AND HAWAII HAS CONFIRMED THAT THE INFORMATION REPORTED ON THE PDF IS 100% ACCURATE OVER AND OVER AND OVER AND OVER AGAIN.

GIVE IT A FORNICATING REST FOR CRYSAKES!

There are a lot of decaffeinated brands on the market today that are just as tasty as the real thing.

no Derek in the WH.pdf

*sigh* You have to be tricksy, like Derek was.

(Hint: comment lines begin with “%”)

OK, I found it. resetting bit 7

Excellent! You’ve nearly got this thing blown wide open, now!

(“bit 8”, but whatevs)

OK, I downloaded xpdf and looked at readme.

The included “pdfimages” Seems useful to extract the layers,

I’ll try this.

But these programs start from a pdf, they don’t create pdfs,

why would Derek be in the pdf ?

Quartz creates PDFs.

When you open a document in Preview, the Quartz libraries parse the PDF. Preview then holds the resulting information in its own internal format.

When you then save from Preview, it creates a brand new PDF. And the folks who coded Quartz made use of Derek’s routines.

OK, I think I’m starting to understand where Keith was coming from…

but in what format was it, when Quartzs opened it ?

And I assume,the mixed raster compressing was done before.

So, if it was already a pdf, then why use Quartz ?

Presumably the compressing wrote to another format then.

Or Quartz produced the .pdf which then subsequently was

compressed copying the “Derek”

Oh ho — that’s the $4 million question, isn’t it? (A little bit of “sealing humor”, there.) That’s what Garrett and nbc and everyone are trying to work out, or pretending to work out depending on who we’re talkiung about, punctuated by brief moments of “and exactly why are we doing this again?” lucidity.

Basically, the final Preview save has blasted away most of the clues. We can assume that it was very likely a PDF (could’ve been a TIFF, tho), and that it had eight 1-bit images and one 8-bit image.

A fair assumption.

Because that’s what Macs do.

My theory is that someone received the original document via email on their Mac, and opened it to view it by clicking on it. That invokes Preview, which in turn uses Quartz.

I don’t see why that would be true.

Nope, the “Derek” is a standard part of what Quartz always outputs when it writes a PDF file.

I can’t compile pdfimages and the windows binary doesn’t

work for me either. Maybe someone can upload the layers ?

pdfimages doesn’t work

LW, I don’t understand.

If it was a compressed pdf, then there was no need to use preview or whatever.

If it was a jpg or whatever, then you’d have 9 files to be merged into the pdf ?!

A jpg has no layers, AFAIK.

> My theory is that someone received the original document via email on their Mac,

> and opened it to view it by clicking on it. That invokes Preview, which in turn uses Quartz.

doesn’t make sense to me. When it was already compressed with 9 layers,

then it was a .pdf – what else ? No need to use preview, unless you just only wanted

to add the Derek to it. Or delete the original metadata as the birthers claim.

or the computer that compressed it had no internet connection.

Maybe it was included in the scanner with a cable to upload

the file to the Mac. The webmaster opened it in preview

to check it or because he always did it that way and then

preview uploaded it.

Oh dear god in heaven, F! Stop piling on density, you’ll collapse into a singularity any second now and swallow the earth. Jiminy-chrismas….

Simple office workflow, happens millions of time a day throughout the digitized world.

Document is sent to someone tasked with adding it to a website. They placed it on the office document center, selected their email address, and hit scan. The document center created a PDF from the scan per its software and settings and e-mailed it as an attachment. The person went back to their desk, open the message, and opened the attachment (Mac > default app is Preview > which usees Quartz engine), and then re-saved it, to their local machine or server for later upload to a web server, or directly to the web server. They would open it to see that it was legible and an OK scan prior to upload to the whole world for the President’s announcement.

There may have been add’l steps between the scan and the upload to web server. Insert your conspiracy theories there.

It’s no wonder birthers are terrified of shadows.

This is Occam’s Bizarro World Razor at work.

maybe an additional pdf-compression center

Yes, and maybe they put in clipart of an invisible pink unicorn.

It really, really does not matter.

The point is that despite Garrett Papit and people tons less rational than him (that’s a compliment, Garrett!) claiming anything to the contrary, there are obvious ways the PDF could be the way it is, and certainly nothing that would lead one to believe the State of Hawaii is lying.

And that’s the key part; everything else is smoke screen. In order for “PDF tampering” to be relevant, the State of Hawaii has to have been lying from 2008 through to today. (And even then, I can think of no reason why the PDF would have to have been “tampered”.)

This is what the CCP is claiming.

Explain how the following quote from your report is not biased? For someone honestly attempting to recreate what is found on the WH pdf, but failing, the unbiased and logical conclusion is simple. Unable to reproduce the results.

“Second, there would be a need to cover the digital tracks if this document was tampered

with in an Adobe product. Simply exporting it, as a PDF from Photoshop or Illustrator,

would result in metadata that would show the application used to create it. If Photoshop

or Illustrator metadata existed within this file it would be strong evidence that the

document was altered or digitally compiled. Converting the final product to PDF within

Mac Preview would be a perfect way of ‘erasing’ the ‘digital chain of custody’ that exists

within the file’s metadata. “

It would have been compressed by the document center at the time of the scan. MRC is standard as a “scan for web” default, as it retains best legibility at screen resolutions and smallest possible file size.

So is that why it seems as if I’ve spent only thirty seconds on this blog, but when I walk away from the event horizon (my computer), I find out I’ve lost half a day?

A legal workflow could indeed include saving using Quartz as it prints at 72DPI making it hard to OCR. Of course it was meant to remove hidden data and metadata, it’s part of any workflow in legal and hospital environments where privacy etc are of real concern.

But removing metadata hardly amounts to forgery.

Derek is always there…

Sigh.. Have you bothered to try it yourself?

The actual text is

PDF Comment ‘%\xc4\xe5\xf2\xe5\xeb\xa7\xf3\xa0\xd0\xc4\xc6\n’

XOR(0x80) produces

44:65:72:65:6b:27:73:20:50:44:46

which translates to

Derek’s PDF

LW, yes, but we are still trying to verify the single claims.

So to get insides in their (birthers) motivation and thoughts.

———————————————-

Linda, they (WH) could just have edited the metadata

——————————————–

Potter, it seems that the exact compression routine is not

so standard and maybe only used for different color fonts

and backgrounds. They chose the text part to be 1-bit

and double resolution, realizing that the background

details are not so important.

But that would only be a manual choice of the compression

software or settings, not the compression details.

It would be absurd to split the layers manually this way,

separating words.

——————————————

nbc, they could have a simple utility to remove metadata.(and

add a Derek (and a smileyface))

what’s with d6fc2758ceb2f98f54abce9a4b28fc1c

(repeated) near the end

I have no Mac, am not familiar with it.

——————————————–

I finally succeeded with pdfimages,exe, I still have to shift

the layers for perfect overlay. I’ll post it later.

I didn’t realize the 2 last last layers had the white points,

thought they were empty. So, were the layers chosen by color

of blocks, connected components ?

Maybe that’s what you’re doing. I’ve already seen enough of their motivations and thoughts to last a lifetime.

Since the birthers themselves do not know why the PDF is the way it is, finding out why cannot give us any insights to their motivations.

The Derek is a metadata tag added to PDF check the internet. Why use a ‘utility’ when it’s part of the workflow?

The trailer is found in all documents. It’s an ID but I am not sure at this time what it represents

d6fc2758ceb2f98f54abce9a4b28fc1c

Check out the PDF spec, it’s online, even to non Mac users

Sample trailer

Also

/ID (Optional; PDF 1.1) An array of two strings constituting a file identifier (see Section 9.3, “File Identifiers”) for the file.

Why can birthers not self-educate?

I do not believe they are white. They are created using a color setting. Check out the actual code that paints the object. If you just paint the bitmap, make sure it is given the right color.

The blocks are chosen by how close the text color is. Which helps understand why it contains much of the stamps in the document.

LW, do they really not know it better or are they making wrong stuff up

deliberately ?

—————–

nbc, because a utility can run from batch, can be easily adapted for different

outputs. thanks for note 106

———————

let’s see whether we can reproduce their choice of layers

———————-

I produced an overlay of the 2 big layers as the original

pdf–>bmp resolution , 2552×3304

hmm, is it correct ? I can’t find that size with google

the bmp is 25MB

here I found a 2550×3300

http://www.sandraoffthestrip.com/wp-content/uploads/birth-certificate-long-form-8.jpg

here the 6 text layers at 2500×3300:

http://obamabc.files.wordpress.com/2012/05/obamabirthcertificate_white.jpg

and the background at 2500×3300:

http://www.palmettoinc.com/after.jpg

But a horrible solution for workflow.

These are not mutually exclusive options.

They clearly do not know, or they would say. And we’ve caught them making stuff up, time and time again.

Here is what Mara Zebest points to after opening the pdf in wordpad, for one of the 1-bit layer,

“/Length 10 0 R /Type /XObject /Subtype /Image /Width 1454 /Height 1819/ImageMask true /BitsPerComponent 1 /Filter /FlateDecode ”

Here is what the PDF Reference Manual says about “ImageMask” and “BitsPerComponent”

“An image mask (an image XObject whose ImageMask entry is true) is a monochrome image in which each sample is specified by a single bit. However, instead of being painted in opaque black and white, the image mask is treated as a stencil mask that is partly opaque and partly transparent. Sample values in the image do not represent black and white pixels; rather, they designate places on the page that should either be marked with the current color or masked out (not marked at all). Areas that are masked out retain their former contents. The effect is like applying paint in the current color through a cut-out stencil, which lets the paint reach the page in some places and masks it out in others.”

“An image mask differs from an ordinary image in the following significant ways:

•The image dictionary does not contain a ColorSpace entry because sample values represent masking properties (1 bit per sample) rather than colors.

•The value of the BitsPerComponent entry must be 1.

•The Decode entry determines how the source samples are to be interpreted. If the Decode array is [ 0 1 ] (the default for an image mask), a sample value of 0 marks the page with the current color, and a 1 leaves the previous contents unchanged. If the Decode array is [ 1 0 ], these meanings are reversed.”

So the eight 1-bit layers are treated like stencils through which the color is poured. To use the language of Mixed Raster Content.

Hope this is meaningful.

They could but they got stuck on self-medicate.

each “pixel” is usually encoded by 3 bytes, 3 integer values between 0 and 255

for red,green,blue. when all 3 are 255, you see it as white, when they are all 0,

you see it as black. Areas with similar values for red,green,blue were

combined into layer, the layer-area then was compressed and assigned

that similar value, so every pixel in that area now has exactly the same value

as any other in the area. This process allows an efficient compression.

The rest (background) has too many different colors and was compressed

with another method. Although this methos is apparently not very common,

it makes mathematically much sense and is not, what a forger might even

get the idea to do. He might want to put the stamps and signature into

different layers, yes, but that’s another process and he would have deleted

the tracks once done. The separation in the pdf can be explained by the color

values separation. (my current impression, I may have to examine this further

unfortunately we don’t have the uncompressed scan that they started with)

Wrong. There is a 4th byte for the alpha-channel, the transparency level.

This nitpicking about pixels and compression and PDF details makes me feel like I’m in a dull episode of Seinfeld.

I know. I appreciate the foreigner is apparently trying to educate himself, but this discussion is so last summer.

Seen the new Perriér commercial? Pretty awesome.

Since the idea of forgery is entirely ludicrous and asinine because the information has been verified by the state of Hawaii, it’s more likely a symptom of bizarre and manipulative attention whoring than honest inquiry. The posting history says troll. And it’s obviously Obama’s fault.

What I don’t see is what’s all the fuss about the birth certificate. Birthers haven’t finished their forensic analysis of the Constitution. It doesn’t even have a raised seal. All they have are pictures on the Internet.

The State of Hawaii cannot forge its own documents. Once the State says the document is a genuine record of a fact, that is the end of the discussion. Fighting about a picture of that genuine document is nuts.

Interesting observation. And the number of hidden smileys and freemason symbols is still unknown.

Technically, there is only one layer in the long form PDF. Adobe Illustrator says plainly: “Layers: 1.” There are multiple clipping groups. And as you say all of the one bit objects are not images, but image masks denoting patterns of the same color.

I believe Mark Gillar looked at the first page of the Constitution and declared that the smudge marks must be “vital statistic codes”.

http://www.archives.gov/exhibits/charters/constitution_zoom_1.html

And on the same page, (Article 1 Section 3 Clause 6) the line about impeaching the President, someone clearly added the words “is tried,” after the original document was written.

Forensic examination of the ink to determine the age will tell us if those words were added in 1787 or later.

“We the People” is clearly not in the same font as the rest of the document. Could quill pens at that time even make such large bold letters?

Finally, there should be at the bottom of the each page a notation – 1 of 4, 2 of 4, etc. and each page needs to initialed by the Framers to show they are in agreement with each page.

None of the anomolies taken by themselves are meaningful but taken in their totality suggests some weird sh!t going on in Philadelphia.

One last point, the laws of Pennsylvania in 1787 allowed anyone to issue a Constitution without evidence that they were American citizens.